Load balancing is a technique to distribute workload evenly across two or more computers, devices, network links, CPUs, hard drives, or other resources, in order to get optimal resource utilization, maximize throughput, minimize response time, and avoid overload. Using multiple components with load balancing, instead of a single component, may increase reliability through redundancy. The load balancing service is usually provided by a dedicated program or hardware load balancing device (such as a multilayer switch or a DNS server).

Internet: One of the most common applications of load balancing is to provide a single Internet service from multiple servers, sometimes known as a server farm. Commonly, load-balanced systems include popular web sites, large Internet Relay Chat networks, high-bandwidth File Transfer Protocol sites, Network News Transfer Protocol (NNTP) servers and Domain Name System (DNS) servers.

For Internet services, the load balancer is usually a software program that is listening on the port where external clients connect to access services. The load balancer forwards requests to one of the “backend” servers, which usually replies to the load balancer. This allows the load balancer to reply to the client without the client ever knowing about the internal separation of functions. It also prevents clients from contacting backend servers directly, which may have security benefits by hiding the structure of the internal network and preventing attacks on the kernel’s network stack or unrelated services running on other ports.

Some load balancers provide a mechanism for doing something special in the event that all backend servers are unavailable. This might include forwarding to a backup load balancer, or displaying a message regarding the outage.
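
The forwarding and fallback behaviour described above can be sketched in a few lines. This is a toy model, not how any particular product works: the `Dispatcher` class, the backend names `app1` and `app2`, the simple rotation used to pick a backend, and the outage text are all assumptions made for illustration.

```python
from itertools import count

OUTAGE_MESSAGE = "Service temporarily unavailable"  # hypothetical fallback text


class Dispatcher:
    """Toy front end: hands each request to one available backend in turn."""

    def __init__(self, backends):
        # backends maps a hypothetical name to a callable that handles a request.
        self.backends = dict(backends)
        self.available = set(self.backends)
        self._turn = count()

    def handle(self, request):
        live = sorted(self.available)
        if not live:
            # Every backend is unavailable: answer with the outage message.
            return OUTAGE_MESSAGE
        name = live[next(self._turn) % len(live)]
        # The client only sees the dispatcher's reply, never the backend itself.
        return self.backends[name](request)


def make_backend(name):
    return lambda request: f"{name} served {request}"


dispatcher = Dispatcher({"app1": make_backend("app1"), "app2": make_backend("app2")})
replies = [dispatcher.handle("/index.html") for _ in range(3)]

dispatcher.available.clear()  # simulate all backends going down
outage_reply = dispatcher.handle("/index.html")
```

A real balancer would forward over the network and could hand off to a backup balancer instead of returning a message; the sketch only shows where that decision is made.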

An alternate method of load balancing, which does not necessarily require a dedicated software or hardware node, is called round robin DNS. In this technique, multiple IP addresses are associated with a single domain name (e.g. www.example.org); clients themselves are expected to choose which server to connect to. Unlike the use of a dedicated load balancer, this technique exposes to clients the existence of multiple backend servers. The technique has other advantages and disadvantages, depending on the degree of control over the DNS server and the granularity of load balancing desired.
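
As a rough illustration of round robin DNS, the sketch below simulates a name server that returns several addresses for one name and a client that chooses among them. The `RoundRobinZone` class, the rotation of the answer order on each query, and the `192.0.2.x` documentation addresses are assumptions for the example; real DNS servers and resolvers differ in how they order and select records.

```python
import random
from collections import deque


class RoundRobinZone:
    """Toy name server that rotates the address list on every query."""

    def __init__(self, records):
        self._records = {name: deque(addrs) for name, addrs in records.items()}

    def resolve(self, name):
        addrs = self._records[name]
        answer = list(addrs)
        addrs.rotate(-1)  # the next query sees the following address first
        return answer


def choose_address(answer, rng=None):
    """Client-side choice: first address by default, random if rng is given."""
    return rng.choice(answer) if rng is not None else answer[0]


zone = RoundRobinZone(
    {"www.example.org": ["192.0.2.10", "192.0.2.11", "192.0.2.12"]}
)

# Four clients that each take the first address of their own answer.
picks = [choose_address(zone.resolve("www.example.org")) for _ in range(4)]

# A client that picks at random from the full answer instead.
random_pick = choose_address(zone.resolve("www.example.org"), random.Random(0))
```

Because the choice is made on the client side, every address in the answer is visible to clients, which is the exposure of backend servers noted above.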

Load balancing device features

Hardware and software load balancing devices can come with a variety of special features.

- Asymmetric load: A ratio can be manually assigned to cause some backend servers to get a greater share of the workload than others. This is sometimes used as a crude way to account for some servers being faster than others.

- Priority activation: When the number of available servers drops below a certain number, or load gets too high, standby servers can be brought online.

- SSL Offload and Acceleration: SSL processing can place a heavy burden on a web server, especially on its CPU, and end users may see slow responses as a result (or, at the very least, the servers spend many cycles on work they were not designed for). To address this, a load balancer that handles SSL in specialized hardware may be used. When the load balancer terminates the SSL connections, the burden on the web servers is reduced and end users are less likely to see degraded performance.

- Distributed Denial of Service (DDoS) attack protection: load balancers can provide features such as SYN cookies and delayed-binding (the back-end servers don’t see the client until it finishes its TCP handshake) to mitigate SYN flood attacks and generally offload work from the servers to a more efficient platform.

- HTTP compression: reduces the amount of data to be transferred for HTTP objects by using the gzip compression available in all modern web browsers.

- TCP offload: different vendors use different terms for this, but the idea is that normally each HTTP request from each client is a different TCP connection. This feature utilizes HTTP/1.1 to consolidate multiple HTTP requests from multiple clients into a single TCP socket to the back-end servers.

- TCP buffering: the load balancer can buffer responses from the server and spoon-feed the data out to slow clients, allowing the server to move on to other tasks.

- Direct Server Return: an option for asymmetrical load distribution, where request and reply have different network paths.

- Health checking: the balancer will poll servers for application layer health and remove failed servers from the pool.

- HTTP caching: the load balancer can store static content so that some requests can be handled without contacting the web servers.

- Content Filtering: some load balancers can arbitrarily modify traffic on the way through.

- HTTP security: some load balancers can hide HTTP error pages, remove server identification headers from HTTP responses, and encrypt cookies so end users can’t manipulate them.

- Priority queuing: also known as rate shaping, the ability to give different priority to different traffic.

- Content aware switching: most load balancers can send requests to different servers based on the URL being requested.

- Client authentication: authenticate users against a variety of authentication sources before allowing them access to a website.

- Programmatic traffic manipulation: at least one load balancer provides a scripting language for implementing custom load balancing methods, arbitrary traffic manipulations, and more.

- Firewall: direct connections to backend servers are prevented, for network security reasons.

- Intrusion Prevention System: offers application layer security in addition to the network and transport layer security provided by a firewall.
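
The asymmetric load feature from the list above can be illustrated with a weighted rotation. The sketch below uses one common approach, often called smooth weighted round robin; the server names `fast` and `slow` and the 3:1 ratio are hypothetical, and actual devices may implement weighting differently.

```python
from collections import Counter


class WeightedBalancer:
    """Picks servers in proportion to manually assigned weights."""

    def __init__(self, weights):
        self.weights = dict(weights)          # server name -> integer weight
        self.current = dict.fromkeys(self.weights, 0)
        self.total = sum(self.weights.values())

    def pick(self):
        # Every server earns credit equal to its weight on each round.
        for name, weight in self.weights.items():
            self.current[name] += weight
        # The server with the most credit is chosen and pays back the total,
        # which spreads the heavier server's turns out over time.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


balancer = WeightedBalancer({"fast": 3, "slow": 1})
sequence = [balancer.pick() for _ in range(8)]
share = Counter(sequence)
```

With a 3:1 ratio, `fast` receives three of every four requests, which matches the "crude way to account for some servers being faster than others" described in the list.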

Load balancing device failover

Load balancing is often used to implement failover, the continuation of a service after the failure of one or more of its components. The components are monitored continually (e.g., web servers may be monitored by fetching known pages), and when one becomes non-responsive, the load balancer is informed and no longer sends traffic to it. When a component comes back online, the load balancer begins to route traffic to it again. For this to work, there must be at least one more component than the service needs to carry its load, so that the remaining components can absorb the traffic of a failed one. This is much less expensive and more flexible than failover approaches in which a single "live" component is paired with a single "backup" component that takes over in the event of a failure. Some types of RAID systems can also use a hot spare for a similar effect.
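
The monitoring loop described above can be sketched as a pool that re-probes its servers and only routes to those that pass. This is a minimal model under stated assumptions: the `FailoverPool` class, the server names, and the `probe` callable (a stand-in for fetching a known page) are all hypothetical.

```python
class FailoverPool:
    """Routes only to servers that passed the most recent health check."""

    def __init__(self, servers, probe):
        self.servers = list(servers)
        self.probe = probe            # hypothetical callable: server -> bool
        self.healthy = list(self.servers)
        self._next = 0

    def run_health_checks(self):
        # Failed servers drop out; recovered servers rejoin automatically.
        self.healthy = [s for s in self.servers if self.probe(s)]

    def pick(self):
        if not self.healthy:
            raise RuntimeError("no healthy servers")
        server = self.healthy[self._next % len(self.healthy)]
        self._next += 1
        return server


# Simulated server state; a real probe would contact each server.
status = {"web1": True, "web2": True, "web3": True}
pool = FailoverPool(status, probe=lambda server: status[server])

status["web2"] = False            # web2 stops responding
pool.run_health_checks()
during_failure = {pool.pick() for _ in range(6)}

status["web2"] = True             # web2 comes back online
pool.run_health_checks()
after_recovery = {pool.pick() for _ in range(6)}
```

While `web2` is down, traffic is shared by the two remaining servers, which is why the pool needs spare capacity beyond what normal load requires.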

Products

- A10 Networks

- Array Networks

- Avaya

- Barracuda Networks

- Brocade Communications Systems

- CAI Networks

- Cisco Systems

- Citrix Systems

- Coyote Point Systems

- Crescendo Networks

- Double-Take (NSI Product)

- Ecessa

- Elfiq Networks

- F5 Networks

- jetNEXUS

- KEMP Technologies

- Nortel Networks

- Foundry Networks

- Peplink

- PineApp

- PIOLINK

- Radware

- Resonate

- Stonesoft

- Strangeloop Networks

- Ulticom

- Zeus Technology

- Layer 7 XML Gateway and Load Balancing Device

[Part of the text content is taken from Wikipedia; please see the authors and the option to edit the original text of the article there. The text of Wikipedia and the text of this page are licensed under the GNU Free Documentation License.]

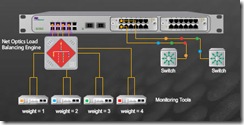

Also see http://us.loadbalancer.org/products.php or the nice and informative load balancing device animation at http://www.network-taps.eu/products/products_director_flash.html - just click on (skip intro) and “Dynamic load balancing” to run the animation.

Load Balancing Device Information and Product Overview

Load balancing is a technique for distributing workload evenly across two or more computers, network links, CPUs, hard drives, or other resources in order to achieve optimal resource utilization, maximize throughput, minimize response time, and avoid overload. The technique is often used to provide a single Internet service from multiple servers, sometimes referred to as a server farm. Typical load-balanced systems include popular websites, large Internet Relay Chat networks, high-bandwidth File Transfer Protocol sites, Network News Transfer Protocol servers, and Domain Name System servers.

In 2024, load balancing is still an important technique for increasing the reliability of networks and ensuring optimal use of resources. Load balancing services are usually provided by dedicated programs or hardware devices such as a multilayer switch or a DNS server. One of the most common applications of load balancing remains the provision of a single Internet service from multiple servers.

An alternative method of load balancing, which does not necessarily require a dedicated software or hardware device, is called round robin DNS. This technique assigns multiple IP addresses to a single domain, and the clients themselves choose which server to connect to.

Load balancing devices can offer a variety of special features, such as asymmetric load, priority activation, SSL offloading and acceleration, protection against distributed denial-of-service attacks, HTTP compression, TCP offload, TCP buffering, Direct Server Return, health checks, HTTP caching, content filtering, firewall security, intrusion prevention, failover, and more.

Various companies offer load balancing products, including A10 Networks, Array Networks, Cisco Systems, Citrix Systems, Barracuda Networks, and many others. These products provide a range of features for improving network security and performance.

Overall, load balancing remains an essential part of network infrastructure in 2024, ensuring efficient distribution of workload and increased reliability. New technologies and innovations in this area may, however, bring further improvements and functionality.

Source: Wikipedia